What's New in JetBrains AI Assistant 2025.2

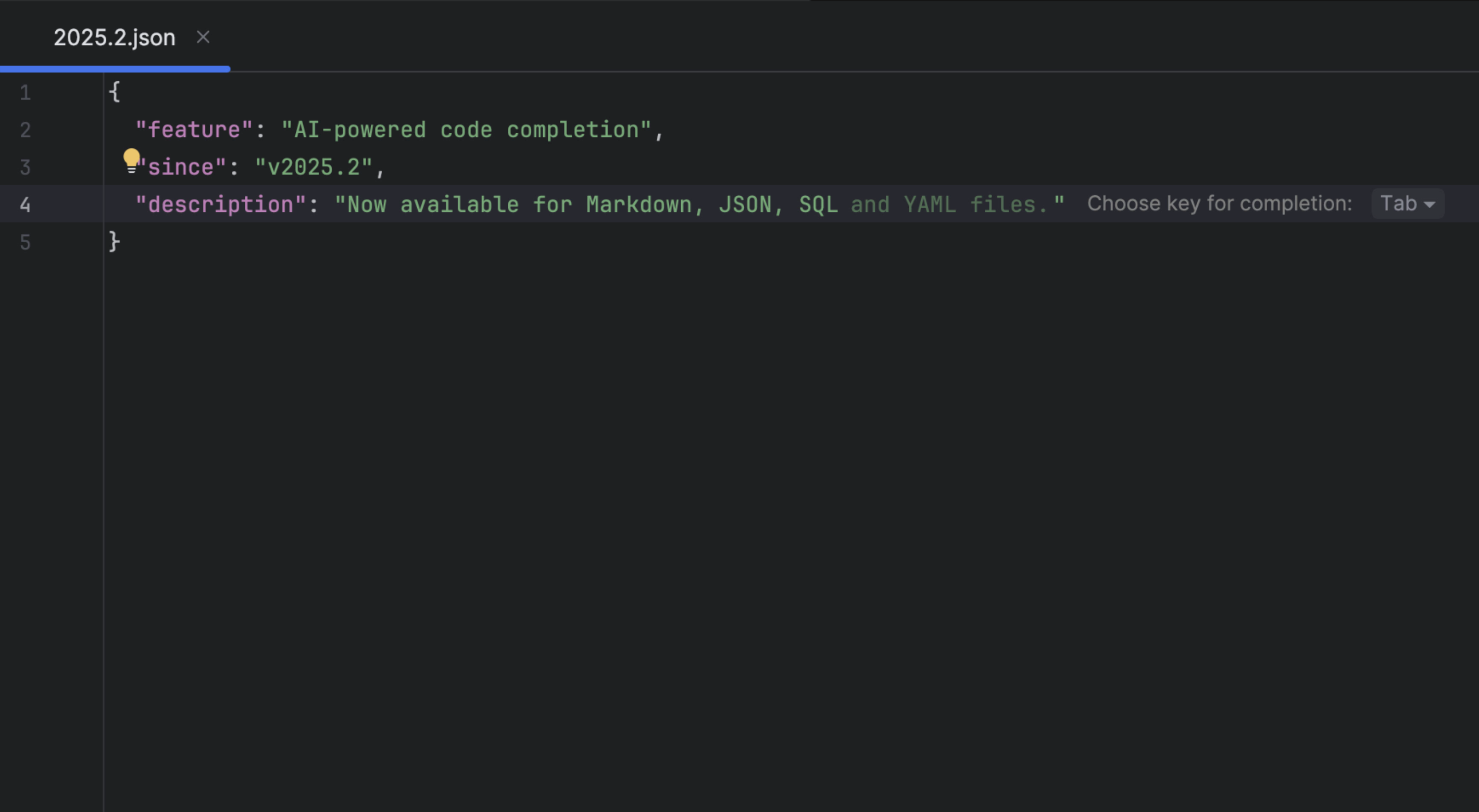

Smarter code completion, greater flexibility

- More file types supported: SQL, YAML, JSON, text, and Markdown join the list.

- Improved suggestions: Thanks to ongoing upgrades to our custom-trained Mellum model and smarter context collection powered by retrieval-augmented generation (RAG), suggestions are now more relevant and accurate.

- Model flexibility: You can now connect your preferred local models for code completion, such as Qwen2.5-Coder, DeepSeek-Coder 1.3B, Codestral, or the fine-tuned, open-source Mellum.

- Local multiline suggestions: Now available in Java and C++.

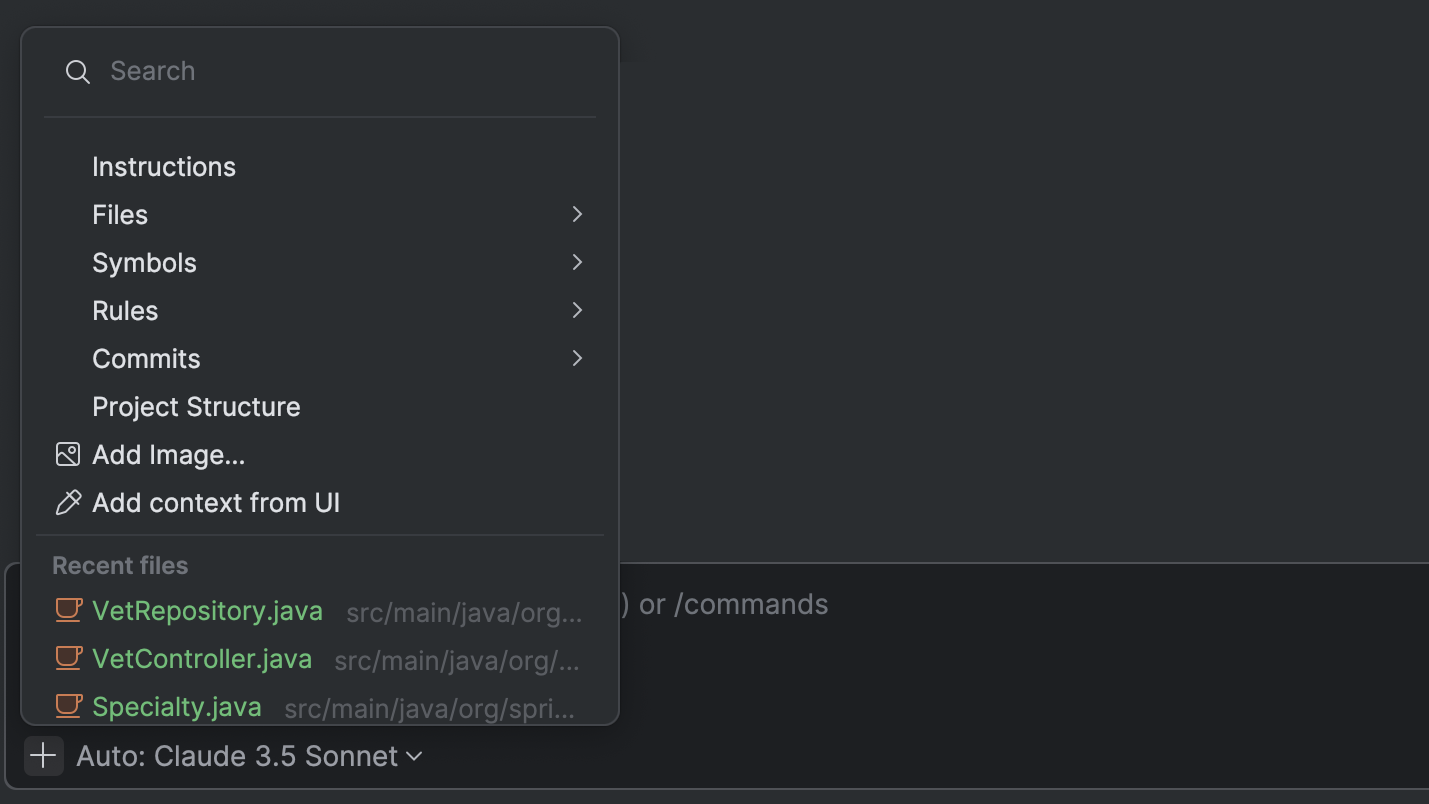

Improved context awareness and control

- Attach images in the AI chat (supported with Anthropic and OpenAI models). This makes it easy to share errors and diagrams without retyping them.

- Explicitly reference the project structure from the AI chat.

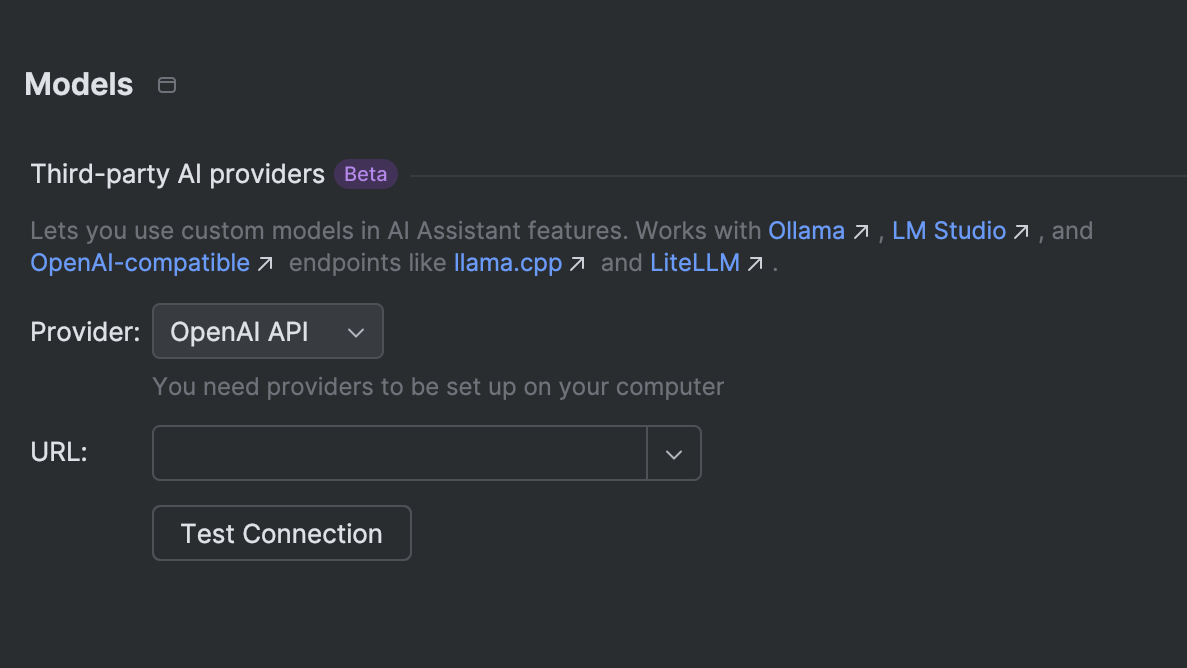

More ways to connect local models via OpenAI-compatible servers

AI Assistant now supports a broader range of local model hosts via OpenAI-compatible APIs, including llama.cpp, LiteLLM, Ollama, and LM Studio.
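In practice, "OpenAI-compatible" means the host exposes an HTTP route that accepts the same JSON shape as OpenAI's Chat Completions API. The minimal Python sketch below builds such a request using only the standard library. It illustrates the protocol these servers speak and is not AI Assistant's own implementation. Several details are assumptions: the default local base URLs (port 11434 for Ollama, 1234 for LM Studio), the model name `qwen2.5-coder`, and the hypothetical helper names `build_chat_request` and `send_chat`.

```python
import json
import urllib.request

# Assumed default base URLs; both ports are configurable, so check the
# documentation of the server you actually run.
ASSUMED_BASE_URLS = {
    "ollama": "http://localhost:11434/v1",
    "lmstudio": "http://localhost:1234/v1",
}


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Hypothetical helper: build a POST to the OpenAI-style /chat/completions route."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON response instead of a stream
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(req: urllib.request.Request) -> str:
    """Hypothetical helper: send the request and return the first reply's text."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(ASSUMED_BASE_URLS["ollama"], "qwen2.5-coder", "Explain this stack trace")
    print(req.full_url)
    # print(send_chat(req))  # only works with a local server running and the model pulled
```

Because every host listed above accepts the same request shape, switching between them in this sketch only means changing the base URL and model name.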

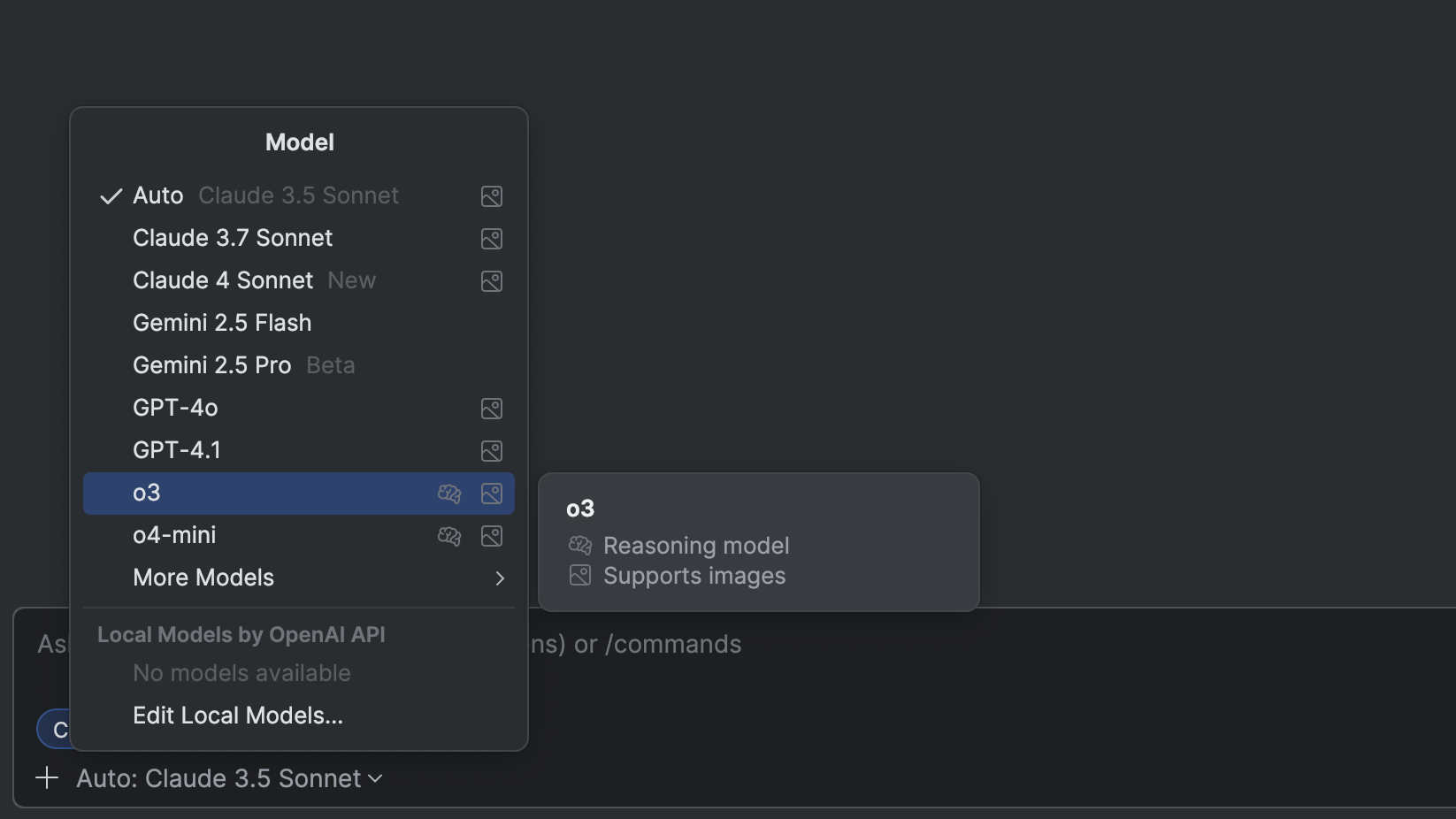

Better guidance for model selection in the AI chat

- Automatic model selection in chat, optimized for performance, accuracy, and cost.

- Visual indicators for each model, displaying cost tier, reasoning capability, and whether it’s in Beta or Experimental status.